Driven by financial needs, a competitive managed services market, enhanced remote network management tools and demanding business requirements, organizations are increasingly delegating control of their network -- including carrier services and service management -- to third-party providers.

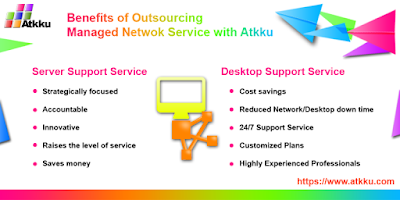

The potential benefits of implementing fully outsourced managed services include reduced total cost of ownership (TCO), access to external expertise and intellectual property, improved operational performance and simplified management. A holistic outsourced model can also allow organizations to focus internal resources on strategic projects and core business activities.

However, relinquishing end-to-end responsibility for the network infrastructure without systematically addressing the service delivery model exposes the organization to significant financial, operational and strategic risks. To mitigate these risks and maximize results, organizations should consider the following best practices:

|

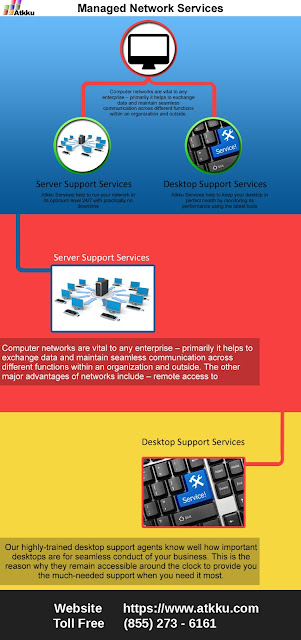

| Outsourcing Benifits with Atkku |

1. Simplify the process for provider and service tower termination.

First, structure the contract with minimal bundling so specific services can be terminated without impacting others. For example, in a recent project involving an offshore provider, the contract was split into separate service towers: Managed Router Services, Network Support Services (LAN, telephony, wireless infrastructure), Dedicated On-site Support, Data Center Management Services and Contact Center Management Services.

Second, retain broad rights to terminate for cause, including termination for provider performance issues.

Third, establish specific termination processes during contract negotiations and build them into the agreement. Explicitly address all aspects of termination, including termination-related charges, provider wind-down support, provider obligations after termination, and the transfer of assets (equipment, software), contracts and even provider personnel. It is always best to negotiate termination details while you still have leverage (i.e., before the contract is signed), because doing so makes termination easier and cheaper, with fewer operational and legal difficulties. Waiting until you are seriously contemplating or initiating termination is too late; by then the provider will likely be less inclined to cooperate.

Well-defined termination processes, combined with service towers that can be peeled off individually (reducing provider revenue), make termination a more effective and credible stick to use if the provider is not performing.

2. Establish clear SLAs with credits defined in the contract.

SLA credits should ramp up with the duration and severity of a service issue and with the number of defaults. SLAs should also carry both financial and non-financial remedies, requiring:

• Rapid escalation processes to the provider's senior/executive management levels.

• Formal quarterly and annual reviews with the provider's executive management level.

• Root cause analysis for every "Severity 1" incident.

• Detailed remediation plans.
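To make the idea of ramping credits concrete, here is a minimal Python sketch of how one month's SLA credit might be computed for a single service tower. The severity base rates, duration tiers, 50% repeat-default escalator and 25% monthly cap are all hypothetical values chosen for illustration; actual figures would be negotiated in the contract.

```python
from dataclasses import dataclass

# Hypothetical base credit (fraction of the monthly service fee) per severity level.
BASE_CREDIT = {1: 0.05, 2: 0.02, 3: 0.01}

# Hypothetical cap: total credits for a month never exceed this fraction of the fee.
CREDIT_CAP = 0.25


@dataclass
class Incident:
    severity: int        # 1 = most severe
    outage_hours: float  # duration of the service issue


def duration_multiplier(hours: float) -> int:
    """Ramp the credit up as the outage drags on (hypothetical tiers)."""
    if hours <= 4:
        return 1
    if hours <= 12:
        return 2
    return 3


def monthly_credit(monthly_fee: float, incidents: list[Incident]) -> float:
    """Return the credit owed for one month of incidents, listed in order of occurrence."""
    total_rate = 0.0
    for defaults_so_far, incident in enumerate(incidents):
        # Each additional default in the same month escalates the credit by 50%.
        repeat_escalator = 1 + 0.5 * defaults_so_far
        total_rate += (
            BASE_CREDIT[incident.severity]
            * duration_multiplier(incident.outage_hours)
            * repeat_escalator
        )
    # Apply the monthly cap, then convert the rate into a currency amount.
    return round(monthly_fee * min(total_rate, CREDIT_CAP), 2)
```

Under these assumptions, a six-hour Severity 1 outage followed by a two-hour Severity 2 outage in the same month on a $10,000 tower yields a $1,300 credit, while three long Severity 1 outages hit the cap at $2,500. The cap keeps the sketch bounded; whether a contract includes one, and at what level, is a negotiation point.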

3. Avoid pushing too hard on price to the exclusion of all else.

As pricing falls below an appropriate level, the overall service and provider relationship becomes less tenable, risking inflexibility and "work to rule," substandard resource assignment and a poor overall experience. Squeezing the provider too hard on price also creates the risk that it will look for ways to win back margin after the contract is signed. For example, it may eliminate promised resources, swap dedicated/on-site roles (e.g., project management) for cheaper shared/off-site resources, or persistently demand change orders for services not explicitly covered by the contract.

Still, if cost reduction is the main driver and a company plans to push hard during price negotiations, it will need to establish provider management once the deal is done to strictly enforce SLAs and monitor performance; otherwise, it can expect "nickel-and-diming" and performance erosion from providers.

4. Engage vendor management early and throughout the engagement.

In general, vendor management involves delivering:

• Support in developing sourcing/termination strategies.

• Contract management (T&C/SLA/KPI compliance, performance reviews).

• Customer-vendor relationship management (from onboarding to divesting).

• Financial management.

• Risk management.

With fully outsourced agreements, organizations are highly dependent on the provider and the quality of service delivery. Yet companies often underestimate the value of vendor management. As a result, investment in and commitment to this critical function are frequently lacking, leading to poor service delivery, chronic service issues, misallocated financial resources and unhappy users.

Keeping vendor management involved during each step of the sourcing/contracting process is essential given its vital role in managing provider performance and value creation during the contract term. This includes involving the vendor management team in sourcing and termination strategies before starting down the road to a fully outsourced network.

5. Think operationally when developing your sourcing strategy and negotiating the agreement.

For example, document the lifecycle of a circuit/service from ordering to expiration/termination, including every permutation (escalations, early cancellation, non-acceptance, poor performance); then, ask the provider to identify and confirm time frames for each process step.

Keep in mind that not every process step needs to be a key performance indicator (KPI) or carry financial credits. However, all steps should be clearly documented in the contract. Formally documenting and monitoring KPIs encourages the provider to perform and keeps each process step moving. Of course, this chain of events is only as strong as its weakest link, and the provider will likely exploit any step that lacks an explicit, well-documented KPI to "pad" the time frames of the other process steps.
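One way to put this into practice is to capture the lifecycle and the provider-confirmed time frames as data, then check each order against them. The minimal Python sketch below assumes a hypothetical five-step slice of a circuit lifecycle with made-up time frames. It flags overruns, distinguishes KPI misses from late non-KPI steps, and calls out any step with no agreed time frame at all, since those gaps are where padding can hide.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Step:
    name: str
    agreed_days: Optional[int]  # time frame confirmed by the provider; None = never documented
    is_kpi: bool                # whether the step is a formally tracked KPI


# Hypothetical slice of a circuit lifecycle, from order to acceptance.
LIFECYCLE = [
    Step("order acknowledgement", 2, True),
    Step("site survey", 10, False),
    Step("carrier order placed", 5, True),
    Step("installation", 30, True),
    Step("customer acceptance test", None, False),
]


def review_order(actual_days: dict[str, int]) -> list[str]:
    """Compare one circuit order's actual step durations with the agreed time frames."""
    findings = []
    for step in LIFECYCLE:
        taken = actual_days.get(step.name)
        if step.agreed_days is None:
            # Undocumented steps are where time can be padded without accountability.
            findings.append(f"{step.name}: no agreed time frame documented")
        elif taken is not None and taken > step.agreed_days:
            label = "KPI miss" if step.is_kpi else "late (non-KPI)"
            findings.append(f"{step.name}: {label}, {taken} vs {step.agreed_days} days")
    return findings
```

For an order where the site survey took 14 days, installation took 35 days and acceptance testing took 9 days, `review_order` reports a late (non-KPI) site survey, a KPI miss on installation and an undocumented acceptance-test time frame. A fuller model would also cover the permutations mentioned earlier, such as escalations, early cancellation and non-acceptance.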

6. Maintain internal ownership of IT network architecture/design services.

Owning these functions better positions IT to align the network with business requirements. Ownership also builds critical internal expertise that can aid network and process optimization and infrastructure refresh decisions and, if needed, support the transition of the network and managed services to another provider.

Finding the right balance between leveraging the provider's resources and expertise and developing institutional knowledge may be difficult, but it can be done, for example, by making IT responsible for documentation requirements (e.g., as-builts, configurations, network diagrams), negotiating the right to hire provider staff assigned to the account, or requiring asset transfer at contract termination.

7. Carefully consider asset ownership.

Fully outsourced deals have historically used an "asset-heavy" model, in which the customer either transfers asset ownership to the provider or the provider supplies the assets directly. Both customers and providers favor this model for many reasons, including:

• One-time cash payment from provider to customer for existing assets.

• Reduced capital funding in favor of operating expenditures.

• Predictable cost stream and improved return on assets (ROA).

• No need for separate maintenance agreements; fewer providers may simplify provider management.

• Provider organizational scale/expertise/remote tools to drive ongoing operational efficiencies and cost reductions.

• CPE capacity planning responsibility assumed by provider (e.g., if circuit upgrade requires router upgrade).

• Providers encourage it -- asset ownership is a perfect way to create "stickiness"; once the provider services are in place, the effort/cost to take back asset ownership is perceived by the customer (correctly or not) to be prohibitive.

Despite these benefits, there is a growing preference for an asset-light model, driven by alternative lease-back financing that allows an organization to own the asset without significant capital outlay or loss of asset control. Further, improvements in remote infrastructure management (RIM) tools and virtualization technologies make it easier for customers to deploy and use these technologies themselves. These enhanced RIM tools also allow offshore providers to compete more effectively against onshore providers.

If asset ownership is the provider's responsibility, the provider's asset refresh commitments must be established during contract negotiations; a commitment to keep critical IT assets current throughout the contract term is important to include in the final agreement. Additionally, companies should prohibit the provider from purchasing or leasing assets from its affiliates or subsidiaries; such relationships can obscure the underlying cost of assets, limit visibility into asset inventories and services billing, and make service termination more difficult.

Ultimately, the pros and cons of asset ownership must be carefully weighed by both IT and finance to determine which asset model makes the most sense.

8. Bundle transport services or negotiate a separate transport agreement?

Bundling transport with the managed services has the upside of handing time-consuming transport tasks (e.g., orders, disconnects, billing management, troubleshooting) to the provider. It also avoids separate transport contract negotiations, leaving "only" the managed services contract to worry about.

The downside? Bundling transport with managed services means carrier costs are no longer transparent. Cost visibility and financial control diminish as transport billing becomes a lump-sum charge on the managed service invoice.

In addition, bundling often removes the IT organization further from network services. As a result, internal transport expertise erodes, increasing IT's dependence on the provider. As with ownership of IT network architecture/design, maintaining intimate knowledge of the network is critical to delivering real value to the business units.

9. Plan extensively before migrating to a fully outsourced environment.

Numerous activities must take place before the migration plan is executed. In-depth discussions with the business units are needed to understand their requirements and future plans. Detailed site migration schedules, financial management processes, data center/site readiness plans, resource plans, communication plans and process management (incident, problem, change, asset management, etc.) must all be in place before managed services are rolled out.

Ongoing real estate activity and any pending M&A must be understood and factored into the migration plan. Executing the transition properly requires significant planning by both the enterprise and its provider partner(s) for the ramp-up to a 100% outsourced environment (and, as needed, the wind-down).

Migrating critical IT network infrastructure and services to a fully outsourced environment is complex and risky, but it also has the potential for significant cost and efficiency benefits. Mitigating the risks requires preparing for and looking beyond the sourcing event: developing a sound sourcing strategy, negotiating strong, flexible contract terms, putting appropriate provider management in place and keeping IT continuously involved. By taking these steps, organizations can help ensure the managed services provider delivers real value to their business and customers.

|

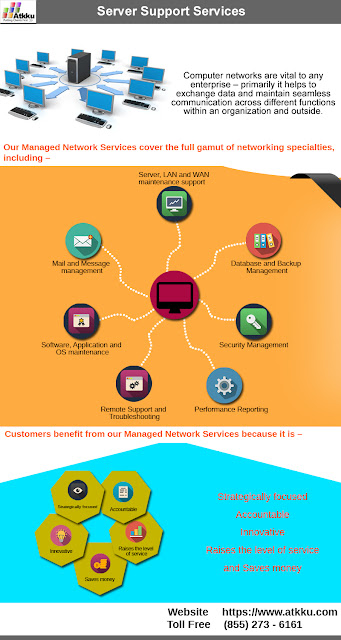

| Server Support Service Benefits |

Cost-effective Network Service: http://www.atkku.com/Managed-Network-Services.aspx

Dial for free: (855) 273-6161

Keep in touch: marketing@atkku.com